I have been attending international conferences about consciousness for nearly two decades (for example, www.consciousness.arizona.edu/ ), where hundreds and hundreds of scientists and philosophers have discussed what consciousness might be, with no resolution. There are many “theories” of consciousness (https://en.wikipedia.org/wiki/Consciousness ), some more developed than others.

When I use the term consciousness, I mean the kind of personal subjectivity that I have myself. I am conscious and I know it and you can’t talk me out of it. As Descartes said more succinctly, “I think, therefore (I cannot doubt that) I am.”

To those theorists who deny that there is such a thing as subjective consciousness and say it is mere delusion, I reply, “Why are you talking then?” To deny the existence of consciousness makes as much sense as the person who loudly declares, “I do not exist!” It’s like an argumentative solipsist. All you can do is walk away.

For the rest of us who admit the obvious, the problem of consciousness is, “What is it?” About the only scientific answer we have so far is that, whatever it is, it seems correlated to brain activity somehow (and more broadly, to bodily activity, the brain being part of the body of course).

If the brain/body is severely altered, a person reports changes in experience. Likewise, though less dramatically, experience can cause changes in the brain/body. For example, exercise can build up your muscles and learning things results in changes in brain structure and function.

So it is clear that consciousness is somehow related to its embodiment. Critically, we must always bear in mind that correlation is not causation. Two phenomena, A and B, can be related in several ways:

- A causes B (even if through a complex pathway of events, even if A causes much else besides B).

- B causes A.

- A and B cause each other interactively (as in electromagnetic propagation).

- Some third factor, C, causes both A and B, so that they appear correlated with each other (as the number of churches in a town is correlated with the crime rate, both being driven by the size of the population).
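The last case is the easiest to overlook, so here is a rough sketch of it as a toy simulation. Everything in it is an assumption made for illustration: the towns, the numbers, and the function names are invented, not taken from any real data. Population plays the role of C, and churches and crimes are each generated from population alone, never from each other.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def residuals(xs, ys):
    """What is left of ys after subtracting the best straight-line fit on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return [(y - mean_y) - slope * (x - mean_x) for x, y in zip(xs, ys)]


def simulate(seed=0, towns=500):
    """Hypothetical towns: population (C) drives both churches (A) and crimes (B)."""
    rng = random.Random(seed)
    population = [rng.uniform(1_000, 100_000) for _ in range(towns)]
    # Invented rates: about 1 church per 1,000 people and 1 crime per 100 people.
    churches = [p / 1_000 + rng.gauss(0, 5) for p in population]
    crimes = [p / 100 + rng.gauss(0, 50) for p in population]

    raw = pearson(churches, crimes)
    # Remove the part of each variable explained by population, then correlate
    # what remains (a simple partial correlation).
    adjusted = pearson(residuals(population, churches),
                       residuals(population, crimes))
    return raw, adjusted


raw, adjusted = simulate()
print(f"churches vs. crimes, raw: {raw:.2f}")
print(f"churches vs. crimes, population removed: {adjusted:.2f}")
```

In this made-up world the raw correlation comes out strong, and it all but vanishes once population is accounted for, even though churches and crimes never touch each other in the code. The correlation is real; the causal story someone might read into it is not.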

Despite this common caution about interpreting correlation, theorists of consciousness often forget the rules and suggest, imply, or state outright that the brain causes consciousness. No scientific evidence demonstrates that. It is a mere opinion. Nevertheless, that is the dominant assumption about the nature of consciousness today, and it is not only invalid, it is patently wrong.

How do I know the brain does not cause consciousness? Because brains and consciousness have such different properties that there is no way one could cause the other. Consciousness is not physical. Experience has no mass, volume, conductivity, or temperature. Brains, being physical, have all those properties and more. What are the physical dimensions of an idea? The question cannot be answered. Brains and ideas are incommensurable.

For a brain to cause, create, or generate conscious experience it would have to violate several laws of physics, such as the conservation of matter and energy. It is not even conceptually plausible that a brain could cause consciousness. All we can properly say is that there is a strong correlation between brain activity and consciousness. When one occurs, often the other does too. How can we explain that correlation? We can’t. We must learn to live with that fact.

The impossibility of a causal connection between brain and consciousness does not, however, deter theorists of consciousness, who blithely carry on with presuppositions that cannot, in principle, be true. They do this by invoking ambiguous language that projects consciousness into the brain, reifies it, then “discovers” it there. This linguistic trick is probably not intentional, not meant to deceive. It is just a bias that cannot be squelched.

I have become attuned to such sleights of hand, which I call weasels. That’s why I say that most theories of consciousness amount to a bag of weasels.

Let’s look at a couple of recent articles about the nature of consciousness to see how this bag of weasels appears.

A recent article in the prestigious science journal Nature takes on the question of what consciousness is: “What is Consciousness” by Christof Koch (www.nature.com/articles/d41586-018-05097-x ). Koch has been a regular presenter at the consciousness conferences mentioned earlier and a contributor to the Journal of Consciousness Studies (www.imprint.co.uk/product/jcs/ ).

The article begins by dismissing those who deny the existence of subjective experience (Hooray!) and goes on to say that we can only properly speak of the neural correlates of consciousness (NCC). (Double hooray).

And yet, very quickly, the first weasel is let out of its bag as the author asks, “What must happen in your brain for you to experience a toothache, for example?”

The question presupposes that to experience pain, something must first happen in the brain. The implication is that the brain causes the experience. But that has not been established at all. Yet there it is: brains cause experience. First weasel on the loose!

What alternative assumption do we have? One might well ask. I don’t know, but I can say that pain is particularly difficult to pin to a specific bodily correlate. That’s why we have so many backache clinics and conceptual categories like psychosomatic pain. That’s why it’s so hard to say exactly where the pain is. Is the pain even in a tooth or is it in the brain, or somewhere else? This is why doctors have difficulty understanding where the patient hurts and by how much. The author should have stuck to what can be said without self-contradiction: Pain is often correlated to brain activity.

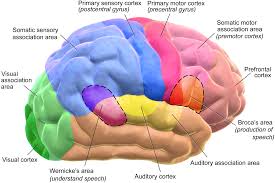

A short time later, the author reports that strong magnetic stimulation of the scalp over the posterior cortex causes a measurable spreading activity across the cortex, which is often correlated with reported flashes of light, geometric shapes, distortions of faces, auditory or visual hallucinations. He concludes …

“So it appears that the sights, sounds and other sensations of life as we experience it are generated by regions within the posterior cortex.”

Excuse me? Experience is “generated” by the cortex? That is an unsupported conclusion. All that was observed was a correlation between cerebral activation and reported experiences. Two weasels out of the bag! All stations, I repeat: We have two weasels on the loose.

Koch then reports a study that found that measuring the electrical activity of the cerebral cortex could be correlated to other behavioral measures of consciousness.

Unable to take such correlates at face value, however, the author then asks,

“What is it about the biophysics of a chunk of highly excitable brain matter that turns gray goo into the glorious surround sound and Technicolor that is the fabric of everyday experience?”

Who said it did? There is no scientific evidence showing that brain matter turns goo into experience. He just made that up. Ah-oo-ga! Ah-oo-ga! A third weasel has escaped!

And so the article goes on, weasel after weasel. I take no joy in catching weasels. I really would like to find a good answer to the question of what consciousness is. So far, all I have is an empty burlap sack and dozens of weasels on the loose.

Let’s look quickly at one more article on the topic, just to demonstrate that I am not picking unfairly on Christof Koch. Stanislas Dehaene, Hakwan Lau, and Sid Kouider have a recent article in the prestigious journal Science, called “What is Consciousness and Could Machines Have it?” (http://science.sciencemag.org/content/358/6362/486.full ).

The authors assert that “consciousness is an elementary property that is present in human infants as well as in animals.” They don’t say, but presumably they mean consciousness is a property of brains. This implausible assertion of a supernatural ability of brains is laid down for us to accept without evidence.

The authors then propose consciousness has two essential operations: One (C1) is the selection of an object of thought for further processing, thus making it available for computation and report. The second (C2) is the self-monitoring of those computations, leading to a subjective sense of certainty or error.

They go on to ask, “How could machines be endowed with C1 and C2 computations?”

Wait, I can answer that: Machines can’t be so ‘endowed.’ Why? Because the authors have not defined what consciousness is, except to say it is a magical property of brains. They have not said what a “mental object” of thought is, nor how a configuration of neurons could “represent” anything in a non-metaphorical way. They also have not defined what a “subjective sense of self” is. As a practical matter, you cannot “endow” computational machines with such airy ideas.

But the authors find only technical, not conceptual problems standing in the way of machine consciousness. They insist that:

“What we call ‘consciousness’ results from specific types of information-processing computations, physically realized by the hardware of the brain.”

If they literally believe that subjective consciousness, including their own, is no more than the clacking of gears and levers, we should stop reading because their words could have no intrinsic human meaning.

Nevertheless, the authors go on to note that:

“Current cars or cell phones are mere collections of specialized modules that are largely ‘unaware’ of each other. Endowing this machine with global information availability (C1) would allow these modules to share information and collaborate…”

They explain, “We call ‘conscious’ whichever representation, at a given time, wins the competition for access to this mental arena and gets selected for global sharing and decision-making…”

However, why any sort of networking would cause anything to “become conscious” is a mystery. How would that work? Would there be a pop and a flash of light and the “selected” set of neuronal connections would suddenly “be conscious?” Maybe a little puff of smoke happens, too?

That is one giant, killer weasel on the loose, one which should not be allowed near the buildings of Tokyo.

The authors note that “Most present-day machine-learning systems are devoid of any self-monitoring…” and that this deficiency should be corrected. What a conscious machine would need is “a secondary network [to] ‘compete’ against a generative network so as to critically evaluate the authenticity of self-generated representations.”

How a secondary network would give conscious machines “an immediate sense that their content is a genuine reflection of the current state of the world,” is unclear. If a machine were to have that sense of the world, it could only arise by magic.

Do these authors take responsibility for unleashing such a bag of vicious weasels? Not at all. In their conclusion, they humbly state that:

“…we surmise that mere information-theoretic quantities do not suffice to define consciousness unless one also considers the nature and depth of the information being processed…Are we leaving aside the experiential component (“what it is like” to be conscious)? Does subjective experience escape a computational definition? … those philosophical questions lie beyond the scope of the present paper…”

Do they indeed? Look at the paper’s structure. It makes a raft of unexamined and unjustifiable philosophical assumptions and proceeds into pseudo-scientific explanations that are really just opinion pieces. If philosophical objections come up, the authors throw up their hands and say “Hey, we’re no philosophers!” It’s a kind of game. The weasel game.

The authors do not intend to deceive. They genuinely are, like their hypothetical computing machines, self-blind. The article concludes with this patently false statement:

“Although centuries of philosophical dualism have led us to consider consciousness as unreducible to physical interactions, the empirical evidence is compatible with the possibility that consciousness arises from nothing more than specific computations.”

There are more weasels than I can count in scientific articles about consciousness. I have picked just two by famous authors in prominent scientific journals, as examples. When I come to papers at conferences or printed in many other journals, I despair. It’s Weasel City, Arizona.

Despite what experts would have you believe, there is no coherent theory or hypothesis in the mainstream scientific literature that gets us even close to an explanation of what consciousness is. For psi-fi authors, chaos is opportunity. Opportunity with caution.

Psi-fi is supposed to be more-or-less realistic, which means we exaggerate the known facts, but we don’t make up stuff wholesale (except where literary license requires). So you can pick any extant theory of consciousness and stick to it, but you really should avoid the most egregious weasels.

If you have an android with full subjective consciousness, you need to own that and not pretend that its “brain” or CPU magically emits consciousness as an output. You’ll need an explanation of how you squeezed consciousness out of silicon. Or you could go with one of the theories that has consciousness built into everything from the start. But then you’ll have to deal with introspective rocks and cogitating cantaloupes. And still avoid the weasels.